zensols.spanmatch package

Submodules

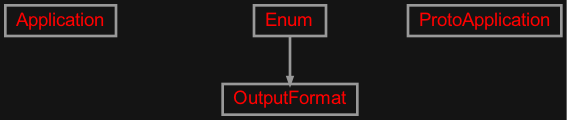

zensols.spanmatch.app

An API to match spans of semantically similar text across documents.

- class zensols.spanmatch.app.Application(doc_parser, matcher)

Bases: object

An API to match spans of semantically similar text across documents.

- __init__(doc_parser, matcher)

- doc_parser: FeatureDocumentParser

The feature document parser that normalizes the whitespace of parsed documents.

- match(source_file, target_file, output_format=OutputFormat.text, selection=<zensols.introspect.intsel.IntegerSelection object>, output_file=PosixPath('-'), detail=False)

Match spans across two text files.

- Parameters

  - source_file (Path) – the source match file
  - target_file (Path) – the target match file
  - output_format (OutputFormat) – the format in which to write the output
  - selection (IntegerSelection) – the matches to output
  - output_file (Path) – the output file, or - for standard out
  - detail (bool) – whether to output more information

- write_hyperparam(output_format=OutputFormat.text)

Write the matcher’s hyperparameter documentation.

- Parameters

  - output_format (OutputFormat) – the format in which to write the hyperparameters
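The output_file parameter of match() follows the common command-line convention where - stands for standard out. A minimal sketch of how a caller might honor that convention is below; open_output is a hypothetical helper and not part of zensols.spanmatch.

```python
import sys
from contextlib import contextmanager
from pathlib import Path
from typing import Iterator, TextIO


@contextmanager
def open_output(output_file: Path) -> Iterator[TextIO]:
    """Yield a writer for ``output_file``, where ``-`` means standard out.

    Hypothetical helper illustrating the convention; not part of the package.
    """
    if str(output_file) == '-':
        # never close standard out; just hand it to the caller
        yield sys.stdout
    else:
        # a real file is opened for writing and closed on exit
        with open(output_file, 'w') as f:
            yield f


# the default PosixPath('-') writes to the terminal
with open_output(Path('-')) as writer:
    writer.write('match output\n')
```

Keeping standard out open matters because the interpreter, not the helper, owns that stream.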

zensols.spanmatch.cli

Command line entry point to the application.

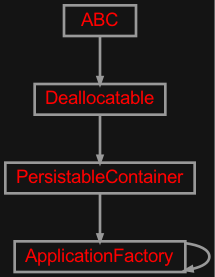

- class zensols.spanmatch.cli.ApplicationFactory(*args, **kwargs)

Bases: ApplicationFactory

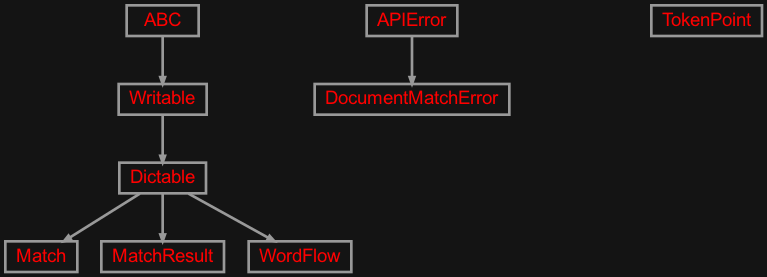

zensols.spanmatch.domain

Domain and container classes for matching document passages.

- exception zensols.spanmatch.domain.DocumentMatchError

Bases: APIError

Thrown for any document matching errors.

- __module__ = 'zensols.spanmatch.domain'

- class zensols.spanmatch.domain.Match(source_tokens=<factory>, target_tokens=<factory>, flow_values=<factory>)

Bases: Dictable

A span of matching text between two documents.

- __init__(source_tokens=<factory>, target_tokens=<factory>, flow_values=<factory>)

- asflatdict(*args, include_norm=False, include_text=False, **kwargs)

Like asdict(), but flatten into a data structure suitable for writing to JSON or YAML.

- property source_document: FeatureDocument

The originating document.

- Return type

FeatureDocument

- property source_lexspan: LexicalSpan

The originating document’s lexical span.

- Return type

LexicalSpan

- property source_span: FeatureSpan

The originating document’s span.

- Return type

FeatureSpan

- source_tokens: Set[TokenPoint]

The originating tokens from the document.

- property target_document: FeatureDocument

The target document.

- Return type

FeatureDocument

- property target_lexspan: LexicalSpan

The target document’s lexical span.

- Return type

LexicalSpan

- property target_span: FeatureSpan

The target document’s span.

- Return type

FeatureSpan

- target_tokens: Set[TokenPoint]

The target tokens from the document.

- property total_flow_value: float

The sum of the flow_values.

- Return type

float

- write(depth=0, writer=<_io.TextIOWrapper name='<stdout>' mode='w' encoding='utf-8'>, include_tokens=False, include_flow=True, char_limit=9223372036854775807)

Write this instance as either a Writable or as a Dictable. If the class attribute _DICTABLE_WRITABLE_DESCENDANTS is set to True, then use the write() method on children instead of writing the generated dictionary. Otherwise, write this instance by first creating a dict recursively using asdict(), then formatting the output.

If the attribute _DICTABLE_WRITE_EXCLUDES is set, those attributes are removed from what is written in the write() method.

Note that this attribute will need to be set in all descendants in the instance hierarchy since writing the object instance graph is done recursively.
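The Match properties above derive a lexical span from the matched tokens and sum the flow values. The sketch below illustrates both ideas with a simplified, hypothetical ToyMatch class, assuming each token is reduced to its (begin, end) character offsets and that a lexical span is the smallest offset range covering all matched tokens. It is not the package's implementation.

```python
from dataclasses import dataclass, field
from typing import List, Set, Tuple

# Hypothetical stand-in: a token is reduced to its (begin, end) character offsets.
Offsets = Tuple[int, int]


@dataclass
class ToyMatch:
    """Simplified, hypothetical analogue of Match; not the package's class."""
    source_tokens: Set[Offsets] = field(default_factory=set)
    target_tokens: Set[Offsets] = field(default_factory=set)
    flow_values: List[float] = field(default_factory=list)

    @staticmethod
    def _lexspan(tokens: Set[Offsets]) -> Offsets:
        # assumed: the smallest character span covering every matched token
        return (min(b for b, _ in tokens), max(e for _, e in tokens))

    @property
    def source_lexspan(self) -> Offsets:
        return self._lexspan(self.source_tokens)

    @property
    def target_lexspan(self) -> Offsets:
        return self._lexspan(self.target_tokens)

    @property
    def total_flow_value(self) -> float:
        # the documented definition: the sum of the flow values
        return sum(self.flow_values)


m = ToyMatch(source_tokens={(0, 3), (4, 9), (10, 14)},
             target_tokens={(22, 27), (28, 31)},
             flow_values=[0.25, 0.5, 0.125])
print(m.source_lexspan, m.target_lexspan, m.total_flow_value)
# (0, 14) (22, 31) 0.875
```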

- class zensols.spanmatch.domain.MatchResult(keys, source_points, target_points, source_tokens, target_tokens, cost, dist, matches=None)

Bases: Dictable

Contains the lexical text match pairs from the first to the second document given by Matcher.match().

- __init__(keys, source_points, target_points, source_tokens, target_tokens, cost, dist, matches=None)

- cost: ndarray

The earth mover's distance solution, which is the cost of transportation from the first to the second document.
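The earth mover's distance minimizes the total cost, the sum over i and j of T_ij × D_ij, of moving one document's word weight onto the other's, where D_ij is the distance between word i and word j and T_ij is the weight moved. In the special case of two equal-length documents where every word has weight 1/n, an optimal flow is a permutation (Birkhoff-von Neumann theorem), so a brute-force search over assignments gives the exact distance. The sketch below uses only that special case with toy 2-D embeddings to show what the solution represents. It is not how Matcher is documented to solve the problem, and the exact shape of the cost array is not reproduced here.

```python
import itertools
import math
from typing import List, Sequence, Tuple

Vec = Sequence[float]


def euclidean(a: Vec, b: Vec) -> float:
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(a, b)))


def uniform_emd(source: List[Vec], target: List[Vec]) -> Tuple[float, Tuple[int, ...]]:
    """Exact EMD when both documents have n words with weight 1/n each.

    Returns the distance and the best assignment (source i -> target perm[i]).
    Brute force is O(n!), so this only suits toy examples.
    """
    n = len(source)
    if n != len(target):
        raise ValueError('this special case needs equal-length documents')
    # pairwise ground distances D[i][j]
    dist = [[euclidean(s, t) for t in target] for s in source]
    best_cost, best_perm = math.inf, ()
    for perm in itertools.permutations(range(n)):
        # each source word sends its full 1/n weight to one target word
        cost = sum(dist[i][j] for i, j in enumerate(perm)) / n
        if cost < best_cost:
            best_cost, best_perm = cost, perm
    return best_cost, best_perm


source = [(0.0, 0.0), (1.0, 0.0)]
target = [(1.0, 1.0), (0.0, 1.0)]
print(uniform_emd(source, target))
# (1.0, (1, 0))
```

General documents with unequal lengths or non-uniform weights need a real linear-programming or optimal-transport solver instead of this enumeration.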

- keys: List[str]

The TokenPoint.key values of the tokens used to normalize document frequencies in the nBOW (normalized bag of words).
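In the word mover's distance formulation, each document is represented as a normalized bag of words (nBOW): the weight of key i is its count divided by the document's total token count, with the keys shared across both documents. The sketch below assumes keys are lowercased token text, which is a hypothetical stand-in for TokenPoint.key; the package's actual keys may differ.

```python
from collections import Counter
from typing import List


def nbow(tokens: List[str], keys: List[str]) -> List[float]:
    """Return the nBOW weight of each key in ``keys`` for one document.

    Tokens are lowercased to form keys (an assumption for this sketch).
    ``keys`` is the vocabulary shared by both documents, so a key that does
    not occur in this document gets weight 0.
    """
    counts = Counter(t.lower() for t in tokens)
    total = sum(counts.values())
    return [counts[k] / total for k in keys]


source = 'The cat sat on the mat'.split()
target = 'A cat lay on a rug'.split()
# shared, ordered vocabulary over both documents
keys = sorted({t.lower() for t in source + target})
weights = nbow(source, keys)
print(dict(zip(keys, weights)))
```

Because each document's weights sum to 1, the earth mover's distance can move all of one document's mass onto the other's.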

- property mapping: Tuple[WordFlow]

Like flows, but do not duplicate sources.

- Return type

Tuple[WordFlow]

- source_points: List[TokenPoint]

The first document’s token points.

- source_tokens: Dict[str, List[TokenPoint]]

The first document’s token points indexed by the TokenPoint.key.

- target_points: List[TokenPoint]

The second document’s token points.

- target_tokens: Dict[str, List[TokenPoint]]

The second document’s token points indexed by the TokenPoint.key.
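The source_tokens and target_tokens attributes index token points by TokenPoint.key, so repeated words in a document share one entry. The grouping can be sketched with a defaultdict; here ToyPoint is a hypothetical (key, index) pair standing in for the package's TokenPoint.

```python
from collections import defaultdict
from typing import Dict, List, NamedTuple


class ToyPoint(NamedTuple):
    """Hypothetical stand-in for TokenPoint: a key and its token index."""
    key: str
    index: int


def index_by_key(points: List[ToyPoint]) -> Dict[str, List[ToyPoint]]:
    # points sharing a key (e.g. repeated words) end up in the same list,
    # in document order
    by_key: Dict[str, List[ToyPoint]] = defaultdict(list)
    for point in points:
        by_key[point.key].append(point)
    return dict(by_key)


points = [ToyPoint(w.lower(), i) for i, w in enumerate('The cat saw the dog'.split())]
print(index_by_key(points)['the'])
# [ToyPoint(key='the', index=0), ToyPoint(key='the', index=3)]
```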

- property transit: np.ndarray

- Return type

np.ndarray

- write(depth=0, writer=<_io.TextIOWrapper name='<stdout>' mode='w' encoding='utf-8'>, include_source=True, include_target=True, include_tokens=False, include_mapping=True, match_detail=False)

Write this instance as either a Writable or as a Dictable. If the class attribute _DICTABLE_WRITABLE_DESCENDANTS is set to True, then use the write() method on children instead of writing the generated dictionary. Otherwise, write this instance by first creating a dict recursively using asdict(), then formatting the output.

If the attribute _DICTABLE_WRITE_EXCLUDES is set, those attributes are removed from what is written in the write() method.

Note that this attribute will need to be set in all descendants in the instance hierarchy since writing the object instance graph is done recursively.

- class zensols.spanmatch.domain.TokenPoint(token, doc)

Bases: object

A token and its position in the document and in embedded space.

- __init__(token, doc)

- doc: FeatureDocument

The document that contains token.

- property embedding: np.ndarray

The token embedding.

- Return type

np.ndarray

- token: FeatureToken

The token in document doc that is used for clustering.
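A TokenPoint places a token both in its document and in embedded space, and the source_position_scale and target_position_scale hyperparameters of Matcher scale a positional embedding component. One plausible reading, sketched below as an assumption rather than the package's actual formula, is to append the token's relative position, multiplied by the scale, to its word vector so that clustering favors tokens that are close together in the text. point_vector is a hypothetical helper.

```python
from typing import List, Sequence


def point_vector(embedding: Sequence[float], index: int, doc_len: int,
                 position_scale: float) -> List[float]:
    """Append a scaled relative position to a word embedding (hypothetical)."""
    # relative position in [0, 1]; guard against single-token documents
    rel_pos = index / (doc_len - 1) if doc_len > 1 else 0.0
    return list(embedding) + [position_scale * rel_pos]


emb = [0.2, -0.1]
print(point_vector(emb, index=3, doc_len=5, position_scale=2.0))
# [0.2, -0.1, 1.5]
```

A larger scale stretches the positional axis, so distance thresholds separate tokens that are far apart in the document more readily; a scale of 0 ignores position entirely.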

- class zensols.spanmatch.domain.WordFlow(value, source_key, target_key, source_tokens, target_tokens)

Bases: Dictable

The flow of a word between two documents.

- __init__(value, source_key, target_key, source_tokens, target_tokens)

- source_key: str

The TokenPoint.key of the originating document.

- source_tokens: Tuple[TokenPoint]

The originating tokens that map from source_key.

- target_key: str

The TokenPoint.key of the target document.

- target_tokens: Tuple[TokenPoint]

The target tokens that map from target_key.

- write(depth=0, writer=<_io.TextIOWrapper name='<stdout>' mode='w' encoding='utf-8'>, include_tokens=False)

Write this instance as either a Writable or as a Dictable. If the class attribute _DICTABLE_WRITABLE_DESCENDANTS is set to True, then use the write() method on children instead of writing the generated dictionary. Otherwise, write this instance by first creating a dict recursively using asdict(), then formatting the output.

If the attribute _DICTABLE_WRITE_EXCLUDES is set, those attributes are removed from what is written in the write() method.

Note that this attribute will need to be set in all descendants in the instance hierarchy since writing the object instance graph is done recursively.
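Each WordFlow pairs a source key with a target key and a flow value. Given a flow matrix from the earth mover's distance solution, where cell [i][j] is the weight moved from key i to key j, the nonzero cells can be turned into flows as sketched below. ToyFlow and flows_from_matrix are hypothetical. The one_per_source option mimics the idea behind MatchResult.mapping ("do not duplicate sources") by keeping only the strongest flow per source key; that tie-breaking rule is an assumption, not documented behavior.

```python
from typing import List, NamedTuple, Sequence


class ToyFlow(NamedTuple):
    """Hypothetical analogue of WordFlow without the token tuples."""
    value: float
    source_key: str
    target_key: str


def flows_from_matrix(transit: Sequence[Sequence[float]], keys: Sequence[str],
                      one_per_source: bool = False) -> List[ToyFlow]:
    # every positive cell becomes a flow from keys[i] to keys[j]
    flows = [ToyFlow(v, keys[i], keys[j])
             for i, row in enumerate(transit)
             for j, v in enumerate(row) if v > 0]
    # strongest flows first
    flows.sort(key=lambda f: f.value, reverse=True)
    if one_per_source:
        # assumed policy: keep only the first (strongest) flow per source key
        seen = set()
        kept = []
        for f in flows:
            if f.source_key not in seen:
                seen.add(f.source_key)
                kept.append(f)
        flows = kept
    return flows


keys = ['cat', 'feline', 'mat', 'rug']
transit = [[0.0, 0.4, 0.0, 0.1],
           [0.0, 0.0, 0.0, 0.0],
           [0.0, 0.0, 0.0, 0.5],
           [0.0, 0.0, 0.0, 0.0]]
for flow in flows_from_matrix(transit, keys, one_per_source=True):
    print(flow)
# ToyFlow(value=0.5, source_key='mat', target_key='rug')
# ToyFlow(value=0.4, source_key='cat', target_key='feline')
```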

zensols.spanmatch.match

Implements a method to match sections from documents to one another.

- class zensols.spanmatch.match.Matcher(dtype=<class 'numpy.float64'>, hyp=None)

Bases: object

Creates matching spans of text between two documents by first using the word mover's distance algorithm and then clustering by the tokens’ positions in their respective documents.

- __init__(dtype=<class 'numpy.float64'>, hyp=None)

- dtype

The floating-point type used for the word mover's distance and clustering.

alias of float64

- hyp: HyperparamModel = None

The model’s hyperparameters.

Hyperparameters:

  - cased (bool) – whether or not to treat text as cased
  - distance_metric (str; one of: descendant, ancestor, all, euclidean) – the default distance metric for calculating the distance from each embedded TokenPoint; see scipy.spatial.distance.cdist
  - bidirect_match (str; one of: none, norm, sum) – whether to order matches by a bidirectional flow
  - source_distance_threshold (float) – the source document clustering threshold distance
  - target_distance_threshold (float) – the target document clustering threshold distance
  - source_position_scale (float) – used to scale the source document positional embedding component
  - target_position_scale (float) – used to scale the target document positional embedding component
  - min_flow_value (float) – the minimum match flow; any matches that fall below this value are filtered
  - min_source_token_span (int) – the minimum source span length in tokens to be considered for matches
  - min_target_token_span (int) – the minimum target span length in tokens to be considered for matches
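The distance threshold hyperparameters control how matched tokens are grouped into spans, and the min_*_token_span hyperparameters drop spans that are too short. The sketch below shows one simple way such settings could act on token positions alone: single-linkage clustering in one dimension, where a new cluster starts whenever the gap between consecutive sorted positions exceeds the threshold, followed by dropping clusters with fewer than the minimum number of tokens. This is an illustration under those assumptions. The package's clustering also involves a scaled positional embedding component and may use a different algorithm. cluster_positions is a hypothetical helper.

```python
from typing import Iterable, List


def cluster_positions(positions: Iterable[float], distance_threshold: float,
                      min_token_span: int = 1) -> List[List[float]]:
    """Single-linkage clustering of 1-D positions (hypothetical sketch).

    Sorted positions stay in the same cluster while the gap to the previous
    position is at most ``distance_threshold``; clusters with fewer than
    ``min_token_span`` members are dropped.
    """
    clusters: List[List[float]] = []
    for pos in sorted(positions):
        if clusters and pos - clusters[-1][-1] <= distance_threshold:
            clusters[-1].append(pos)
        else:
            clusters.append([pos])
    return [c for c in clusters if len(c) >= min_token_span]


# token indexes of matched words in a document
print(cluster_positions([2, 3, 5, 14, 15, 30], distance_threshold=2, min_token_span=2))
# [[2, 3, 5], [14, 15]]
```

In one dimension, splitting at gaps larger than the threshold yields exactly the clusters that single-linkage clustering produces when cut at that distance.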

- match(source_doc, target_doc)

Match lexical spans of text from one document to the other.

- Parameters

  - source_doc (FeatureDocument) – the source document from which words flow
  - target_doc (FeatureDocument) – the target document to which words flow

- Return type

MatchResult

- Returns

the matched document spans from the source to the target document