zensols.cnndmdb package

Submodules

zensols.cnndmdb.app

Creates a SQLite database of the CNN and DailyMail summarization dataset.

- class zensols.cnndmdb.app.Application(config_factory, corpus)

Bases: object

Creates a SQLite database of the CNN and DailyMail summarization dataset.

- __init__(config_factory, corpus)

- config_factory: ConfigFactory

Used to create objects for load().

zensols.cnndmdb.cli

Command line entry point to the application.

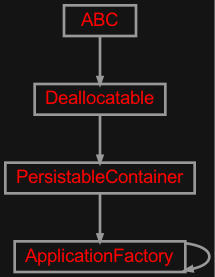

- class zensols.cnndmdb.cli.ApplicationFactory(*args, **kwargs)

Bases: ApplicationFactory

zensols.cnndmdb.corpus

Data access objects (DAOs) for the CNN/DailyMail news summarization corpus. The corpus is sourced from a TensorFlow dataset instance, which in turn uses Abi See's GitHub repo (https://github.com/abisee/cnn-dailymail).

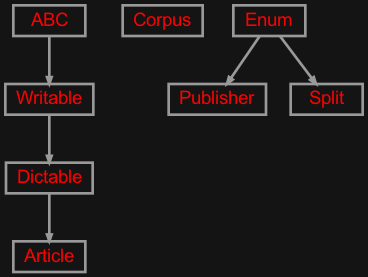

- class zensols.cnndmdb.corpus.Article(id, corp_id, split, publisher, text, highlights=None)

Bases: Dictable

Represents an article from the CNN/DailyMail corpus.

- __init__(id, corp_id, split, publisher, text, highlights=None)

- asflatdict(*args, **kwargs)

Like asdict(), but flattened into a data structure suitable for writing to JSON or YAML.
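To illustrate what "flattened into a data structure suitable for JSON or YAML" can mean, here is a minimal sketch using only the standard library. It is not the zensols Dictable implementation: ArticleSketch, flatten, and as_flat_dict are hypothetical names, and the field types (notably highlights as a tuple of strings) are assumptions. The idea is to reduce every value to primitives, lists, and string-keyed dicts so a serializer never meets a type it cannot handle.

```python
import json
from dataclasses import asdict, dataclass
from typing import Any, Optional, Tuple


@dataclass
class ArticleSketch:
    """Hypothetical stand-in for Article; the field types are assumptions."""
    id: int
    corp_id: str
    split: str
    publisher: str
    text: str
    highlights: Optional[Tuple[str, ...]] = None


def flatten(value: Any) -> Any:
    """Recursively convert a value into JSON/YAML friendly primitives."""
    if isinstance(value, dict):
        return {str(k): flatten(v) for k, v in value.items()}
    if isinstance(value, (list, tuple, set)):
        # tuples and sets become plain lists
        return [flatten(v) for v in value]
    if value is None or isinstance(value, (str, int, float, bool)):
        return value
    # anything else (dates, paths, ...) falls back to its string form
    return str(value)


def as_flat_dict(article: ArticleSketch) -> dict:
    """Hypothetical analogue of asflatdict(): asdict() followed by flattening."""
    return flatten(asdict(article))


art = ArticleSketch(1, 'abc123', 'train', 'cnn', 'Body text.', ('First point.',))
print(json.dumps(as_flat_dict(art)))
```

The tuple in highlights comes back as a JSON-compatible list, which is the kind of conversion that makes the result safe to hand to json.dumps or a YAML dumper.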

- write(depth=0, writer=sys.stdout)

Write this instance as either a Writable or as a Dictable. If the class attribute _DICTABLE_WRITABLE_DESCENDANTS is set to True, then use the write() method on children instead of writing the generated dictionary. Otherwise, write this instance by first creating a dict recursively using asdict(), then formatting the output.

If the attribute _DICTABLE_WRITE_EXCLUDES is set, those attributes are removed from what is written in the write() method. Note that this attribute will need to be set in all descendants in the instance hierarchy, since writing the object instance graph is done recursively.

- Parameters

  - depth (int) – the starting indentation depth
  - writer (TextIOBase) – the writer to dump the content of this writable
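The depth and writer parameters can be understood through a small recursive writer. The sketch below is a hypothetical helper (write_dict is not part of zensols) that assumes each depth level adds a fixed number of spaces of indentation and that nested mappings are written by recursing one level deeper, which mirrors the recursive write described above. Any text stream, such as io.StringIO, works as the writer.

```python
import io
import sys
from typing import Any, Mapping, TextIO


def write_dict(data: Mapping[str, Any], depth: int = 0,
               writer: TextIO = sys.stdout, indent: int = 2) -> None:
    """Write a (possibly nested) mapping, indenting each level by indent spaces.

    Hypothetical helper; not the zensols Dictable.write implementation.
    Like the documented signature, the default writer is bound to sys.stdout
    when the function is defined.
    """
    pad = ' ' * (depth * indent)
    for key, value in data.items():
        if isinstance(value, Mapping):
            # nested mapping: write the key, then recurse one level deeper
            writer.write(f'{pad}{key}:\n')
            write_dict(value, depth + 1, writer, indent)
        else:
            writer.write(f'{pad}{key}: {value}\n')


buf = io.StringIO()
write_dict({'id': 1, 'meta': {'split': 'train'}}, depth=1, writer=buf)
print(buf.getvalue(), end='')
```

Passing a StringIO instead of the default stdout is a convenient way to capture the formatted output, for example in tests.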

- class zensols.cnndmdb.corpus.Corpus(persister, stash)

Bases: object

Provides access to the CNN/DailyMail corpus.

- __init__(persister, stash)

- persister: BeanDbPersister

The DB access object.
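As a rough picture of what a DB access object over the article table might do, the sketch below uses the standard sqlite3 module directly. It does not use BeanDbPersister or the stash, and the table name article and its columns are assumptions made for illustration; CorpusSketch and ArticleRow are hypothetical names. Queries are parameterized so article IDs and split names are never interpolated into SQL text.

```python
import sqlite3
from dataclasses import dataclass
from typing import Iterator, Optional


@dataclass
class ArticleRow:
    """Hypothetical row type; the column set is an assumption."""
    id: int
    corp_id: str
    split: str
    publisher: str
    text: str


class CorpusSketch:
    """Hypothetical DAO over an assumed article table; not the zensols API."""

    def __init__(self, conn: sqlite3.Connection):
        self.conn = conn

    def get(self, article_id: int) -> Optional[ArticleRow]:
        """Return the article with the given ID, or None if it is absent."""
        row = self.conn.execute(
            'select id, corp_id, split, publisher, text from article where id = ?',
            (article_id,)).fetchone()
        return None if row is None else ArticleRow(*row)

    def ids(self, split: str) -> Iterator[int]:
        """Yield the IDs of all articles in a dataset split, in ID order."""
        cur = self.conn.execute(
            'select id from article where split = ? order by id', (split,))
        for (article_id,) in cur:
            yield article_id


conn = sqlite3.connect(':memory:')
conn.execute('create table article (id integer primary key, corp_id text, '
             'split text, publisher text, text text)')
conn.executemany('insert into article values (?, ?, ?, ?, ?)', [
    (1, 'a1', 'train', 'cnn', 'First.'),
    (2, 'a2', 'test', 'dailymail', 'Second.'),
])
corpus = CorpusSketch(conn)
print(corpus.get(2).publisher)
print(list(corpus.ids('train')))
```

Keeping the connection inside one object, as the persister attribute does here, means callers deal in article objects rather than SQL.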

zensols.cnndmdb.load

Classes to populate the database. For data sources, see zensols.stash.

- class zensols.cnndmdb.load.DatabaseLoader(persister, chunk_size, dataset_name='cnn_dailymail', split_spec=None)

Bases: object

Loads the CNN/DailyMail corpus into a new SQLite database file. If the file already exists, it is deleted. This takes about 2 to load.

- __init__(persister, chunk_size, dataset_name='cnn_dailymail', split_spec=None)

- persister: BeanDbPersister

The DB access object.
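The loading behavior described for DatabaseLoader (delete any existing file, then insert the corpus in chunks) can be sketched with sqlite3 and itertools.islice. This is a hypothetical illustration, not the library's loader: load_db and chunks are invented names, the row layout and article table schema are assumptions, and the reading that chunk_size bounds the rows per insert batch is an interpretation of the parameter name. Committing once per chunk keeps each transaction bounded instead of holding the whole corpus in one.

```python
import os
import sqlite3
import tempfile
from itertools import islice
from typing import Iterable, Iterator, List, Tuple

# assumed row layout: (corp_id, split, publisher, text)
Row = Tuple[str, str, str, str]


def chunks(rows: Iterable[Row], chunk_size: int) -> Iterator[List[Row]]:
    """Yield successive lists of at most chunk_size rows."""
    it = iter(rows)
    while True:
        batch = list(islice(it, chunk_size))
        if not batch:
            return
        yield batch


def load_db(path: str, rows: Iterable[Row], chunk_size: int = 1000) -> int:
    """Recreate the SQLite file at path, insert rows in chunks, return the count."""
    if os.path.exists(path):
        # an existing database file is deleted before loading
        os.remove(path)
    conn = sqlite3.connect(path)
    try:
        conn.execute('create table article (id integer primary key, '
                     'corp_id text, split text, publisher text, text text)')
        count = 0
        for batch in chunks(rows, chunk_size):
            conn.executemany('insert into article (corp_id, split, publisher, text) '
                             'values (?, ?, ?, ?)', batch)
            conn.commit()  # one transaction per chunk
            count += len(batch)
        return count
    finally:
        conn.close()


with tempfile.TemporaryDirectory() as tmp:
    db = os.path.join(tmp, 'cnndm.sqlite3')
    rows = [(f'id{i}', 'train', 'cnn', f'text {i}') for i in range(5)]
    print(load_db(db, rows, chunk_size=2))
```

Because rows is consumed lazily through chunks, a generator over the source dataset can be passed in without materializing the whole corpus in memory.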